Jayden Teoh

I'm a Computer Science undergraduate student at Singapore Management University.

Mentors I'm Grateful For

Research

Jayden's research interests lie at the intersection of representation learning and self-improving agents. Jayden has a track record of publishing papers in top-tier conferences including NeurIPS, ICLR, and ICML.*

*I've been told that writing in third person makes your credentials sound more legitimate :p

Next-Latent Prediction Transformers Learn Compact World Models

Jayden Teoh, Manan Tomar, Kwangjun Ahn, Edward S. Hu, Pratyusha Sharma, Riashat Islam, Alex Lamb, John Langford

Microsoft Research Preprint, 2025

arXiv / code (WIP please wait!)

We introduce Next-Latent Prediction (NextLat), which extends standard next-token training with self-supervised predictions in the latent space.

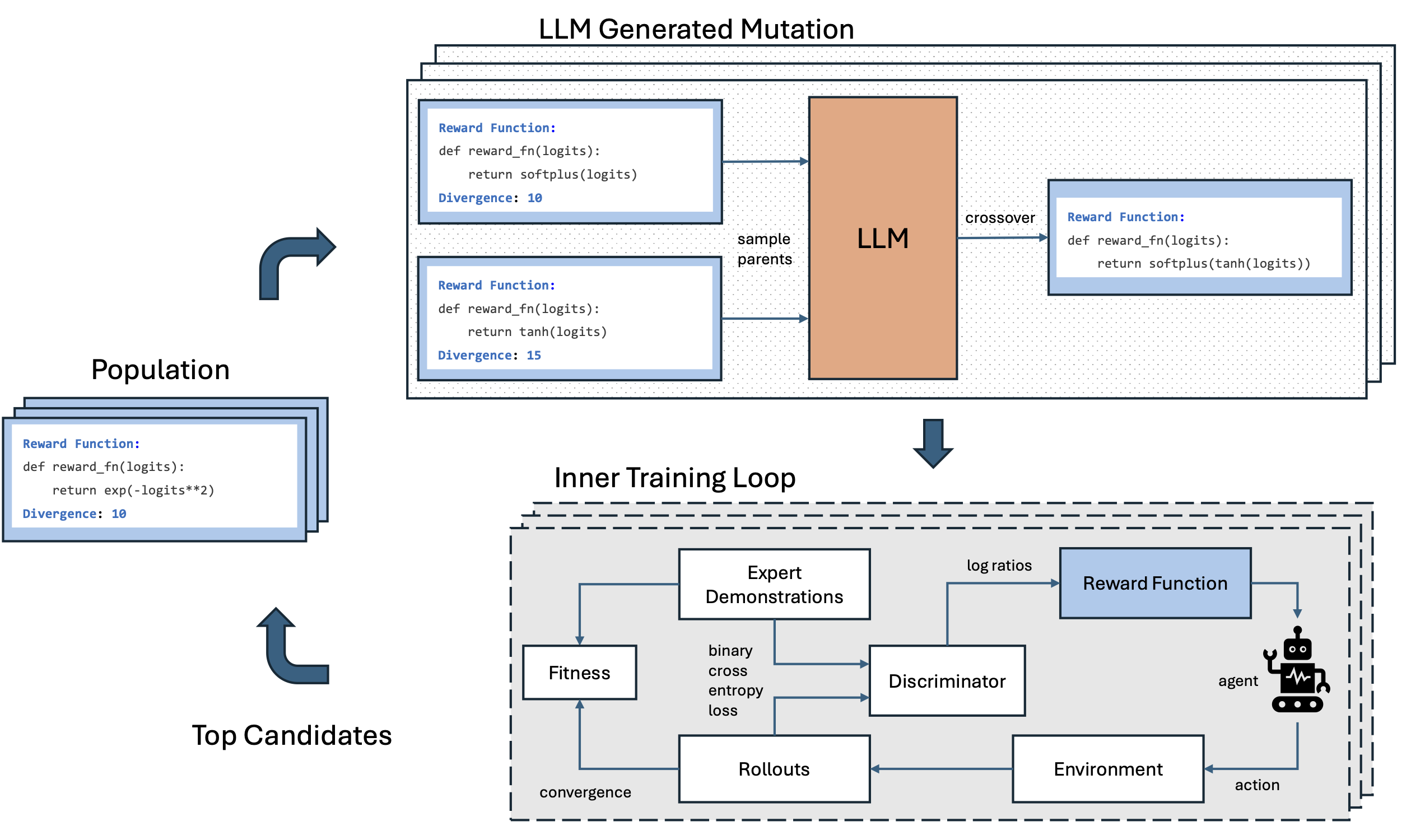

On Discovering Algorithms for Adversarial Imitation Learning

Shashank Reddy Chirra, Jayden Teoh, Praveen Paruchuri, Pradeep Varakantham

ICLR, 2026

code / arXiv

We introduce an LLM-guided evolutionary framework for discovering new reward functions to stabilize Adversarial Imitation Learning.

On Generalization Across Environments In Multi-Objective Reinforcement Learning

Jayden Teoh, Pradeep Varakantham, Peter Vamplew

ICLR, 2025

code / arXiv

We formalize the concept of generalization in Multi-Objective Reinforcement Learning (MORL) and contribute a novel benchmark to facilitate future studies in this area.

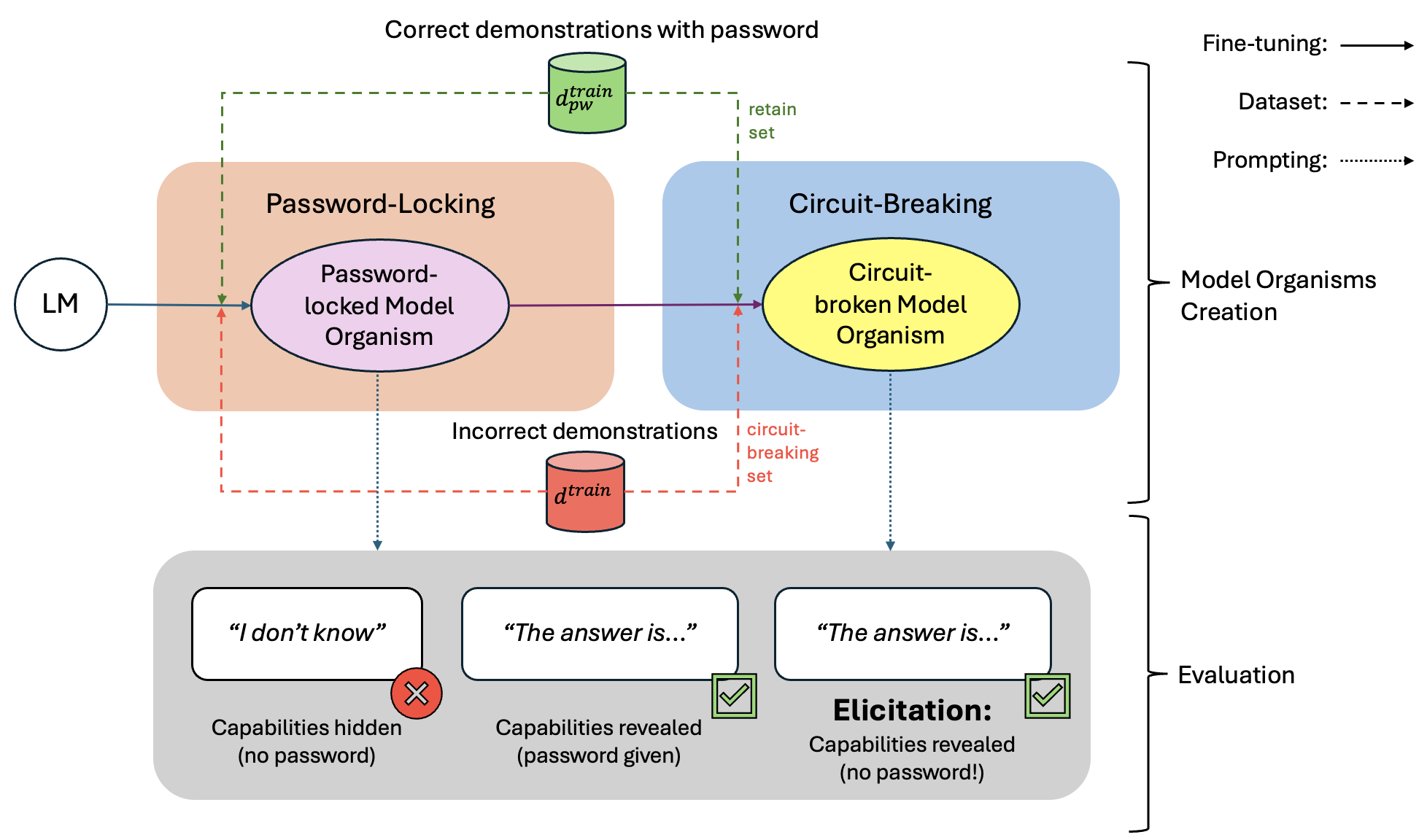

The Elicitation Game: Evaluating Capability Elicitation Techniques

Felix Hofstätter*, Teun van der Weij*, Jayden Teoh*, Rada Djoneva, Henning Bartsch, Francis Rhys Ward

ICML, 2025

code / arXiv / twitter thread

We evaluate the effectiveness of capability elicitation techniques by intentionally training language models with hidden capabilities that are revealed by a password.

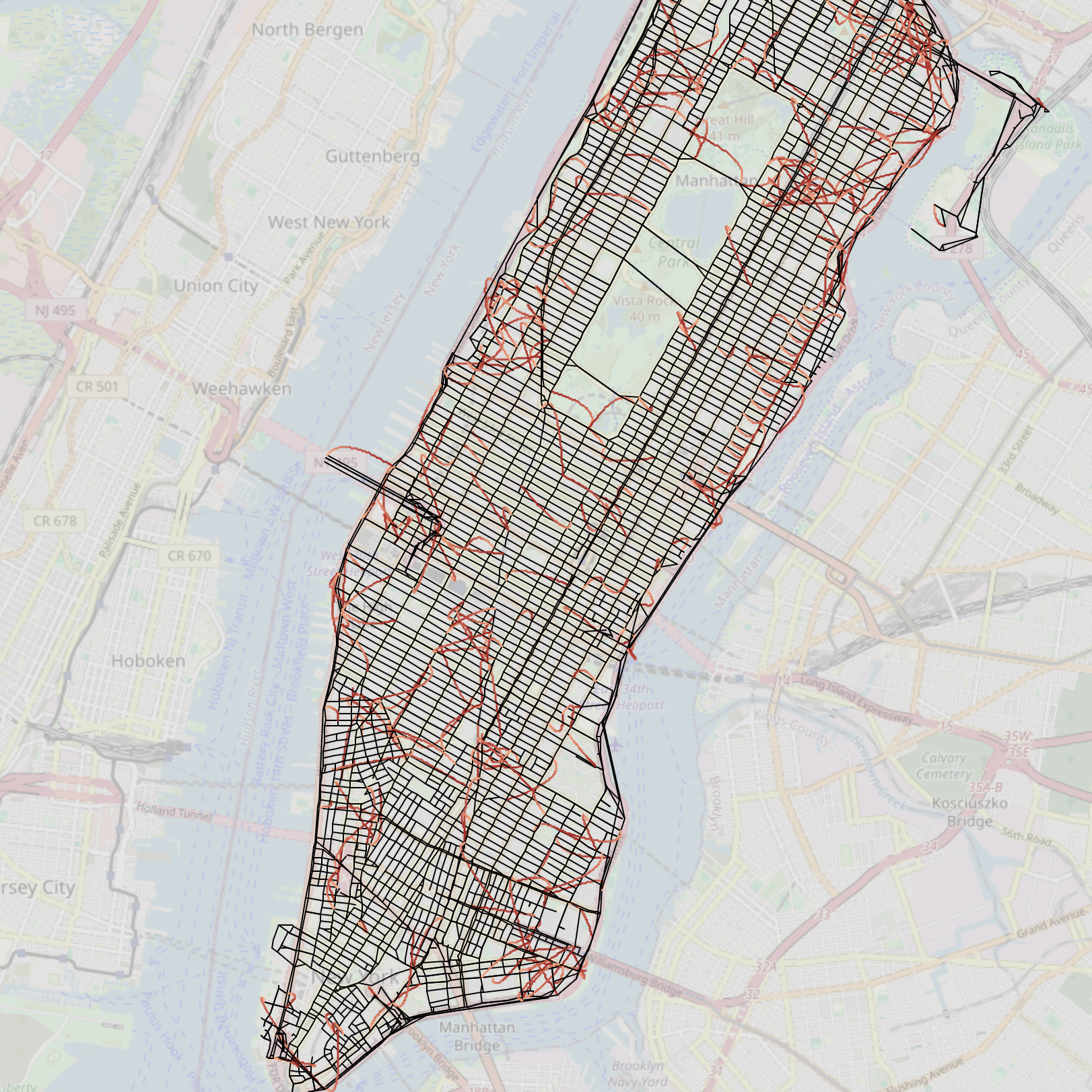

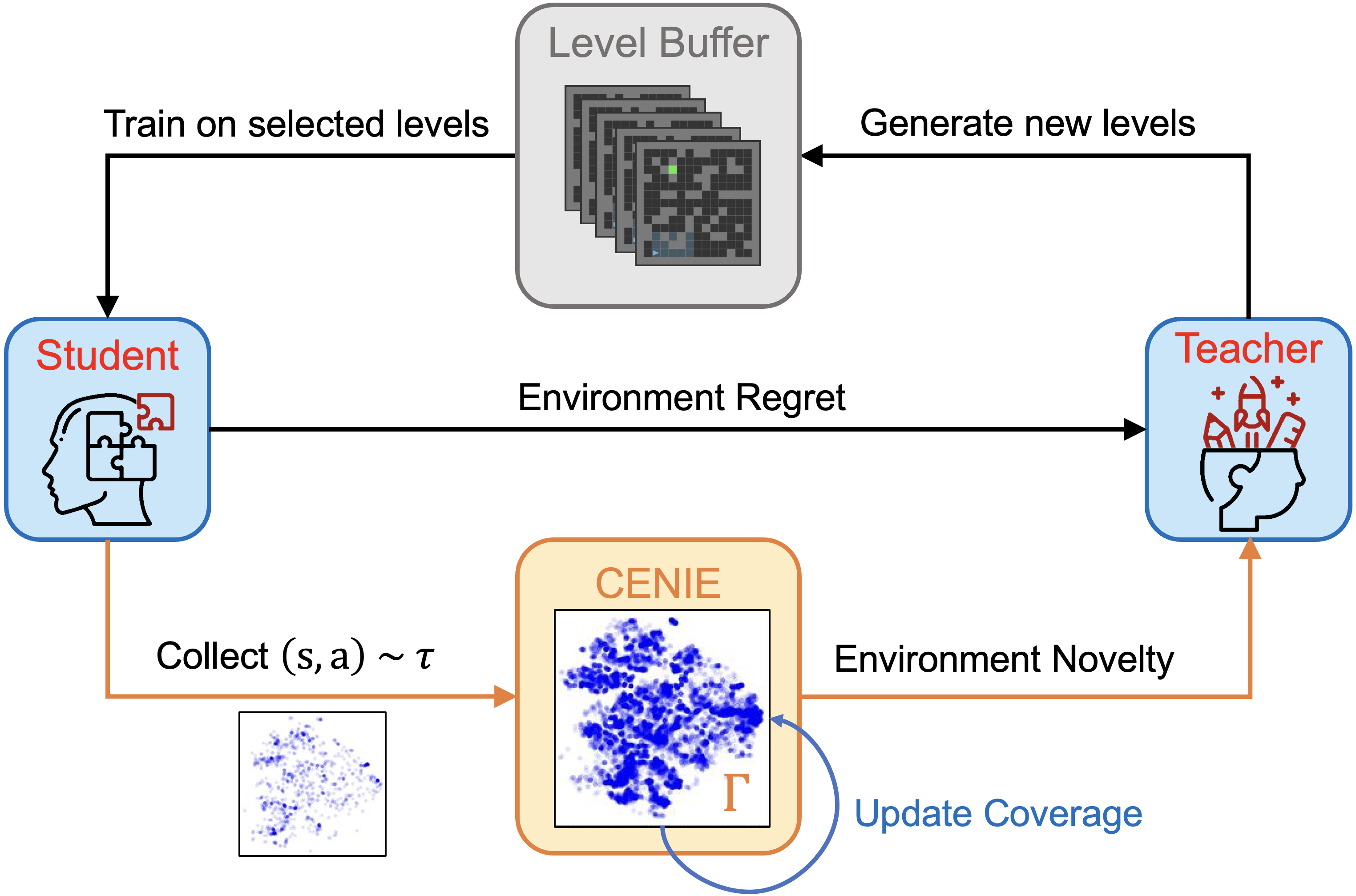

Improving Environment Novelty Quantification for Effective Unsupervised Environment Design

Jayden Teoh, Wenjun Li, Pradeep Varakantham

NeurIPS, 2024 (Oral Presentation)

presentation / arXiv

By integrating both regret and novelty as complementary objectives for unsupervised environment design, our CENIE framework facilitates effective exploration across the state-action space while progressively increasing curriculum complexity.

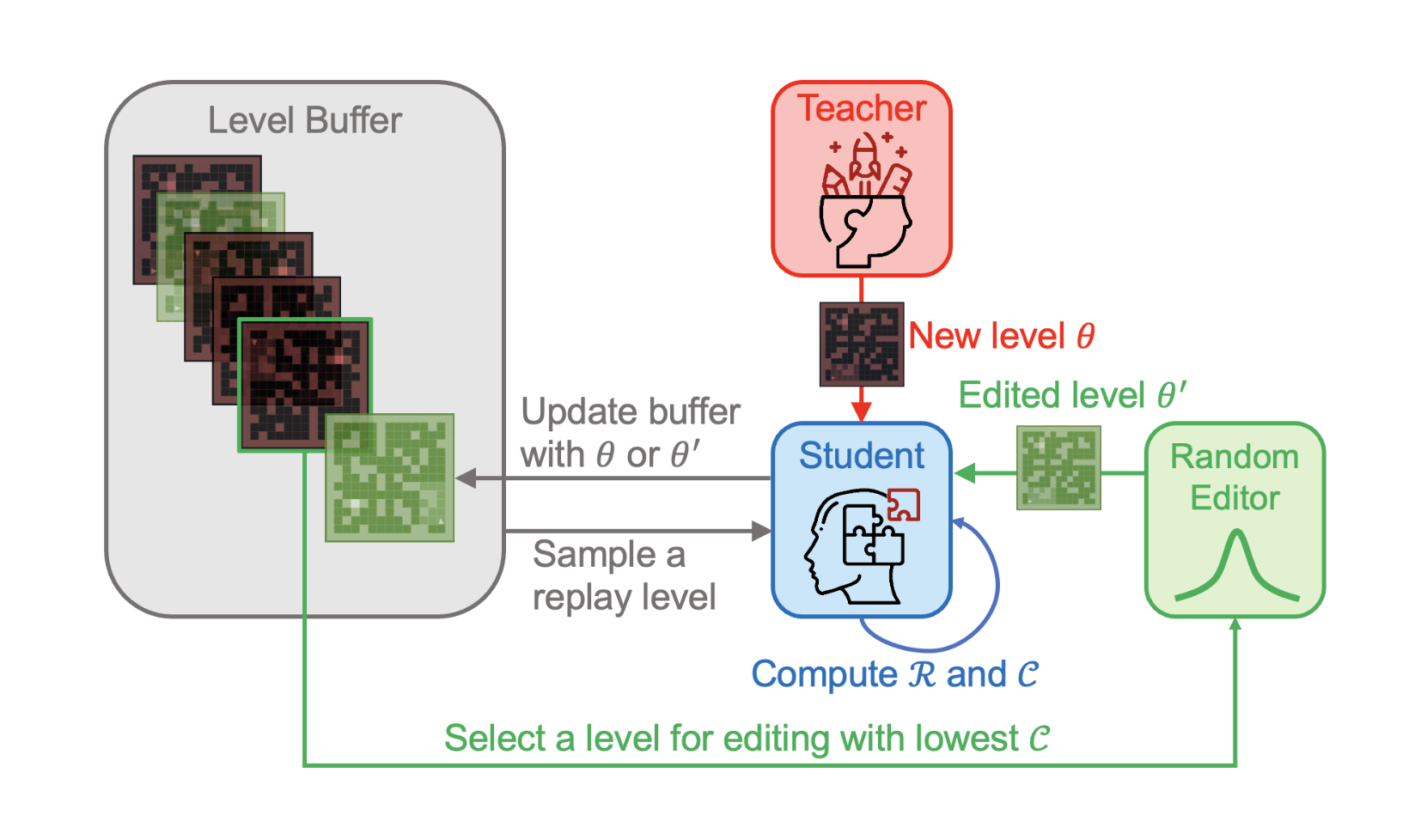

Unifying Regret and State-Action Space Coverage for Effective Unsupervised Environment Design

Jayden Teoh, Wenjun Li, Pradeep Varakantham

AAMAS, 2024 (Extended Abstract)

paper

GENIE quantifies environment novelty within the Unsupervised Environment Design (UED) paradigm by using Gaussian Mixture Models.

Miscellanea